In today’s increasingly connected world, more and more data is being shared online.

This free flow of information has been instrumental in helping the internet grow into an invaluable tool for business and industry. With this growth, however, come concerns around security and privacy as people become more aware of the information they are sharing. In 2018, the European Union’s General Data Protection Regulation (GDPR) came into effect to help address some of these concerns. It gives consumers (at least in the EU and EEA) far greater control over, and transparency into, their own data than ever before.

As artificial intelligence continues to fuel the fourth industrial revolution, similar concerns have naturally been raised about data protection in this space. Companies that offer virtual agents as a contact channel have a duty of care towards their customers to handle their personal data correctly.

Today’s consumers can interact instantly with their favorite brands via automated online chat, but they often have no idea how their information is being protected. In this article, we break down the whys and hows of data privacy and security in conversational AI.

Why is my data being stored?

For conversational AI to improve, it requires very large data sets. Each interaction that a virtual agent has with a customer can reveal where it needs to get better at answering questions and automating requests.

However, no actual customer data from chat logs is used to train the model. Chat logs are used solely to monitor the virtual agent and identify any inquiries it is unable to respond to. When such a gap is found, a separate set of artificial training data is used to teach the virtual agent to respond to that specific inquiry.

Keeping chat log data and artificial training data separate allows for greater flexibility when it comes to security, because it takes the customer completely out of the equation. We have also observed that artificial training data actually outperforms real chat logs on prediction precision. As a result, there is no need to loop in customers and ask for their permission to train the model.

Where is my data stored?

Every message you type into a virtual agent needs to be stored somewhere, even if only temporarily (more on that later). Where that data is stored varies by solution, but a common method (and the one recommended by boost.ai) is in the cloud, using Amazon Web Services (AWS).

AWS is responsible for the physical security of data on its servers, in accordance with the AWS shared responsibility model. This states that “AWS is responsible for protecting the infrastructure that runs all of the services offered in the AWS Cloud. This infrastructure is composed of the hardware, software, networking, and facilities that run AWS Cloud services.”

AWS is incredibly transparent about its security practices and has published several public whitepapers on the subject which we encourage you to read if you’re interested in learning more.

Another option is to store all data on-premises, i.e., on servers at the client’s physical location. This method cuts out the cloud middleman but can require extensive additional IT resources.

Who has access to my data?

Only a limited number of people can ever access the data shared through online chat. Exactly who varies depending on the structure of the company or organization that owns the virtual agent.

Support for tiered access means that you can define different roles for users and only allow access on a need-to-know basis.
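To make the idea concrete, here is a minimal Python sketch of need-to-know, role-based access. The role names (`analyst`, `trainer`, `admin`) and the permissions are hypothetical illustrations, not boost.ai’s actual roles. The key design choice is deny-by-default: an unknown role, or a permission a role was never granted, is refused.

```python
from enum import Enum, auto


class Permission(Enum):
    """Hypothetical permissions for a virtual agent's management panel."""
    VIEW_STATISTICS = auto()
    READ_CHAT_LOGS = auto()
    EDIT_TRAINING_DATA = auto()
    MANAGE_USERS = auto()


# Hypothetical roles: each one is granted only what it needs for its job.
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "analyst": frozenset({Permission.VIEW_STATISTICS}),
    "trainer": frozenset({
        Permission.VIEW_STATISTICS,
        Permission.READ_CHAT_LOGS,
        Permission.EDIT_TRAINING_DATA,
    }),
    "admin": frozenset(Permission),  # every permission in the enum
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Return True only if the role explicitly grants the permission (deny by default)."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


if __name__ == "__main__":
    print(is_allowed("analyst", Permission.READ_CHAT_LOGS))   # False
    print(is_allowed("trainer", Permission.READ_CHAT_LOGS))   # True
    print(is_allowed("intern", Permission.VIEW_STATISTICS))   # False: unknown role
```

Keeping the role table as data, rather than scattering checks through the code, makes it easy to review exactly who can see chat logs.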

AWS can only access data with the client’s prior consent.

How is my data accessed?

Strong authentication methods are a MUST when it comes to the protection of customer data.

Two-factor authentication and IP whitelisting are built into the panel, giving clients the option to add an extra layer of protection at login.
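The article doesn’t specify how these checks are implemented. As one possible sketch, the Python below combines an IP allowlist (using the standard `ipaddress` module) with time-based one-time passwords (TOTP, RFC 6238), a common choice of second factor. The network ranges, the 30-second time step, the one-step clock-drift window and the `login_allowed` helper are assumptions chosen for illustration.

```python
import hashlib
import hmac
import ipaddress
import struct
import time
from typing import Optional

# Hypothetical allowlist a client might configure (documentation ranges from RFC 5737 / RFC 3849).
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def ip_is_allowed(address: str) -> bool:
    """True if the login comes from an allowlisted network (IPv4 and IPv6 both handled)."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in ALLOWED_NETWORKS)


def totp(secret: bytes, for_time: float, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP using HMAC-SHA1 and RFC 4226 dynamic truncation."""
    counter = int(for_time // step)
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, now: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept a code from the current step or `window` steps either side (clock drift)."""
    now = time.time() if now is None else now
    return any(
        hmac.compare_digest(totp(secret, now + i * step, step).encode(), submitted.encode())
        for i in range(-window, window + 1)
    )


def login_allowed(client_ip: str, password_ok: bool, secret: bytes, code: str) -> bool:
    """Hypothetical login gate: allowed IP, correct password and valid one-time code."""
    return ip_is_allowed(client_ip) and password_ok and verify_totp(secret, code)
```

Requiring all three checks means that a stolen password alone is not enough to log in, and a stolen password plus a valid code still fails from an unexpected network.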

What data can be accessed?

Any information collected in non-authenticated ‘open’ chat is purposely not associated with customers, making it impossible for them to be identified. In addition, partial masking filters can be used to obscure personal data fields such as names, numbers and health information.
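As an illustration of partial masking, the Python sketch below uses regular expressions to replace email addresses and to mask long digit runs (such as card or account numbers) while leaving the last two digits visible. The patterns and the `mask_personal_data` function are hypothetical and deliberately narrow. Names and health information, which are also mentioned above, generally cannot be caught reliably by regular expressions alone.

```python
import re

# Hypothetical, deliberately narrow patterns; a real filter needs much broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Six or more digits, optionally separated by single spaces or hyphens (card, account, phone numbers).
LONG_NUMBER = re.compile(r"\d(?:[ -]?\d){5,}")


def _mask_number(match: re.Match) -> str:
    """Partial masking: hide all but the last two digits so the number can still be referred to."""
    digits = re.sub(r"\D", "", match.group())
    return "*" * (len(digits) - 2) + digits[-2:]


def mask_personal_data(message: str) -> str:
    """Replace emails first (so digits inside them are not partially masked), then long numbers."""
    message = EMAIL.sub("[email]", message)
    return LONG_NUMBER.sub(_mask_number, message)


if __name__ == "__main__":
    print(mask_personal_data("My card is 1234 5678 9012 3456, email me at jane.doe@example.com"))
    # My card is **************56, email me at [email]
```

Masking before a message is written to the chat log means the full value never reaches storage, which is simpler to reason about than trying to scrub it afterwards.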

Users who need to log in to a virtual agent (e.g., to complete a bank transaction) are authenticated using a token so that their identity can be verified.
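The article doesn’t say what kind of token is used. Here is a minimal sketch, assuming a hypothetical signed token of the form `payload.signature`: the payload carries a user ID and an expiry time, and the signature is an HMAC-SHA256 over the payload computed with a server-side key. Standards such as JSON Web Tokens build on a similar idea, but this is not a JWT implementation, and `issue_token`/`verify_token` are illustrative names.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64(data: bytes) -> str:
    """URL-safe base64 without padding."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def _unb64(text: str) -> bytes:
    """Inverse of _b64: restore the stripped padding before decoding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(user_id: str, key: bytes, ttl: int = 300, now: Optional[float] = None) -> str:
    """Hypothetical token: base64(JSON claims) + '.' + base64(HMAC-SHA256 signature)."""
    now = time.time() if now is None else now
    payload = _b64(json.dumps({"sub": user_id, "exp": int(now) + ttl}).encode())
    sig = _b64(hmac.new(key, payload.encode(), hashlib.sha256).digest())
    return f"{payload}.{sig}"


def verify_token(token: str, key: bytes, now: Optional[float] = None) -> Optional[str]:
    """Return the verified user ID, or None if the token is malformed, forged or expired."""
    now = time.time() if now is None else now
    try:
        payload, sig = token.split(".")
    except ValueError:
        return None
    expected = _b64(hmac.new(key, payload.encode(), hashlib.sha256).digest())
    # Check the signature (in constant time) before trusting anything in the payload.
    if not hmac.compare_digest(expected.encode(), sig.encode()):
        return None
    claims = json.loads(_unb64(payload))
    if claims["exp"] < now:
        return None
    return claims["sub"]
```

Because the signature is verified before the payload is read, a token that has been altered, signed with a different key, or presented after it expires is rejected.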

Full anonymization features are also available for increased data privacy.

When is my data deleted?

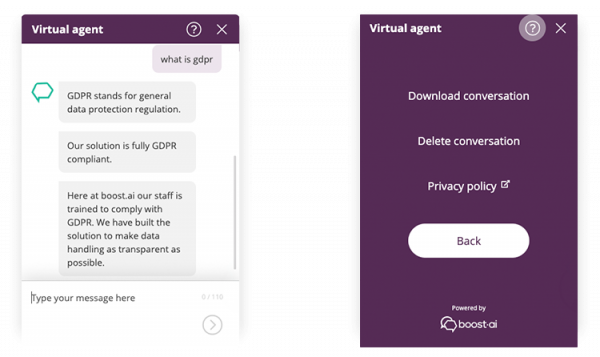

A virtual agent can be built to let customers delete an entire conversation directly from the chat window.

This is important because it puts control in the hands of consumers, giving them the choice of whether or not to keep their interactions with a company private.