Thanks to Automatic Semantic Understanding, AI-powered virtual agents can now go beyond the confines of ordinary chatbots.

Many chatbot solutions today tout the use of deep neural networks, particularly Long Short-Term Memory (LSTM) models, to deliver impressive results. Given the rapid advancements in technology and the growing affordability of cloud computing, this trend is hardly surprising. What is surprising, however, is how often these solutions fall short of their bold promises.

At their core, machine learning and deep learning algorithms identify patterns in training data to differentiate between various targets. Recurrent neural networks (RNNs), such as LSTM, go a step further by not only analyzing adjacent words in a sentence but also considering the broader context—making the order and structure of words critical to understanding.

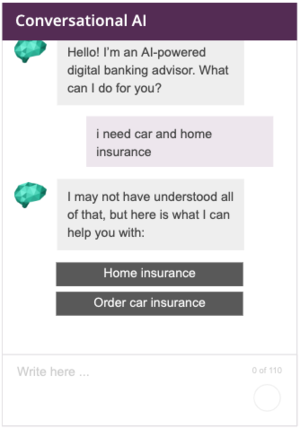

In the following example, the customer asks a simple question about car insurance and gets an appropriate response:

We can see from the backend how the virtual agent interprets the query and has no trouble identifying the correct intent - in this case, ‘Order car insurance’ - with a probability score of 99.42%.
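A probability score like the 99.42% above is commonly obtained by passing a classifier's raw per-intent scores through a softmax function. The sketch below illustrates that idea only; the article does not say how boost.ai computes its scores, and the intent names other than 'Order car insurance' and all the raw scores are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw per-intent scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {intent: math.exp(s - top) for intent, s in scores.items()}
    total = sum(exps.values())
    return {intent: e / total for intent, e in exps.items()}

# Hypothetical raw scores from an intent classifier (not real boost.ai output).
raw_scores = {
    "Order car insurance": 7.2,
    "Cancel car insurance": 1.9,
    "Order travel insurance": 0.4,
}

probs = softmax(raw_scores)
best = max(probs, key=probs.get)
print(f"{best}: {probs[best]:.2%}")
```

When one raw score dominates the others, as here, nearly all of the probability mass lands on a single intent.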

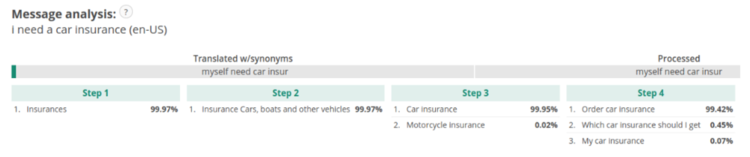

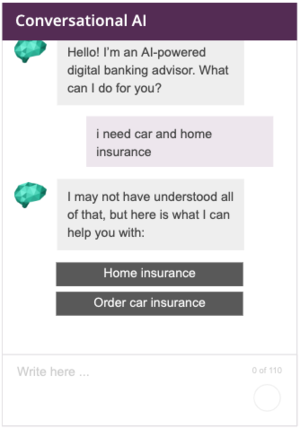

But what if the question is less straightforward:

In this instance, the model becomes confused due to conflicting information in the question. This is the typical outcome you can expect from a lesser chatbot built on LSTM or any other deep neural network - it can manage simple questions easily but tends to sweat the more difficult ones.

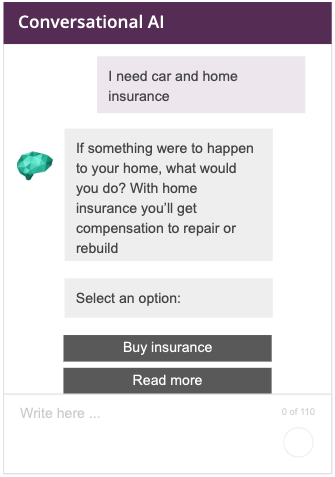

Customers, of course, don’t always have cut-and-dried queries. That is why at boost.ai we’ve developed our algorithms with a higher level of understanding, so that your customers get replies that look more like this:

A deeper understanding of what customers are actually asking for allows virtual agents built on our algorithms to respond with more helpful answers overall.

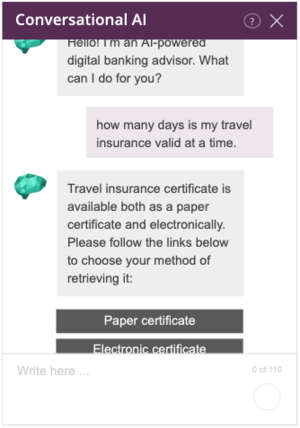

Let’s look at another complex example in which a customer is inquiring about the validity of their travel insurance:

In this example, the model doesn’t have an intent specific to travel insurance validity. The deep learning model generated a response with the closest matching intent but ultimately the answer it arrived at was wrong.

When applying the deeper understanding utilized by conversational AI we get a far better answer:

Rather than immediately responding about travel insurance, the model presents it as an option while acknowledging its uncertainty and clarifying that it doesn’t fully understand the question.

This result is preferable for a couple of reasons:

- It shows the customer that, while the question may not have been fully understood, an approximate answer is offered.

- We can now easily identify on the backend that a ‘validity of travel insurance’ intent should be created to avoid a repeat of this situation.

Introducing Automatic Semantic Understanding (ASU)

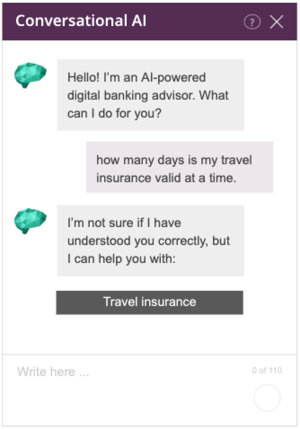

The first step in achieving deeper understanding is to build a good classification model for intents.

Intent predictions are made using a combination of deep learning models such as recurrent neural networks (RNN) and convolutional neural networks (CNN). These are combined with preprocessing steps that are standard in NLP, such as stemming, language detection and tokenization.
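To make two of those preprocessing steps concrete, here is a minimal sketch of tokenization followed by a deliberately crude suffix-stripping stemmer. It is a toy under stated assumptions, not boost.ai's pipeline: the function names and the suffix list are invented for illustration, and a production system would more likely use an established stemmer such as Porter or Snowball.

```python
import re

# Toy suffix list, an assumption for illustration only.
SUFFIXES = ("ing", "ed", "es", "s")

def tokenize(message):
    """Lowercase the message and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", message.lower())

def toy_stem(token):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def preprocess(message):
    """Tokenize, then stem each token."""
    return [toy_stem(token) for token in tokenize(message)]

print(preprocess("I am ordering car insurance for two cars"))
# ['i', 'am', 'order', 'car', 'insurance', 'for', 'two', 'car']
```

After this step, "ordering" and "cars" collapse to the same forms as "order" and "car", so the model sees fewer distinct surface forms.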

Through a combination of highly modified/adapted preprocessing steps, and deep learning models, we create a natural language understanding model which identifies the intent and performs far better than any keyword-based model. These deep neural network graphs are then used in the live setting to determine the intent of a user’s message.

The resulting intent models, however, only perform well up to a point, often giving poor predictions on data that the neural network has not learned from.

At boost.ai, we have tackled this problem by developing Automatic Semantic Understanding (ASU). By adding these extra neural networks to conversational AI, we are able to offer more complex responses and reduce bad false positives by over 90%.

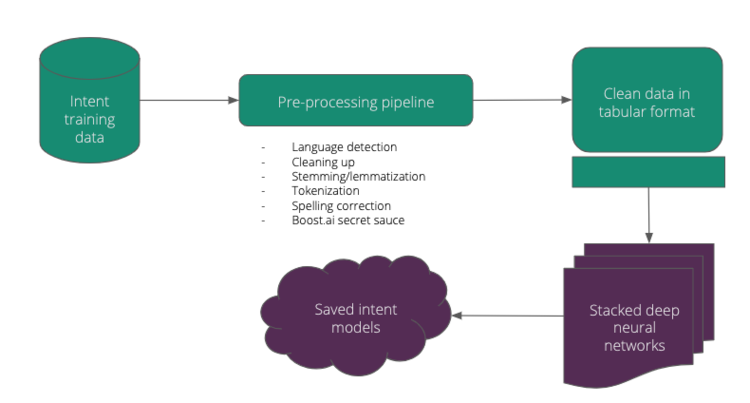

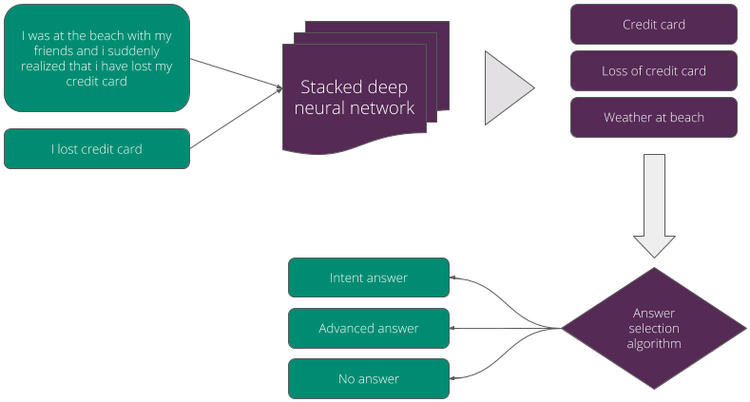

The backbone of ASU is a deep neural network which compresses any message from either training data or live chat into what we call ‘phrases’. Different from what we normally recognize as a phrase in English (or any other language), these try to reduce a message to its simplest form using the aforementioned deep neural network, as illustrated below:

Both the ASU neural network (let’s call it ASUNet) and our reduction algorithm are trained on customer data, just like all other neural networks in boost.ai’s solution. This means they are also able to parse synonyms, acronyms and important words with relative ease.

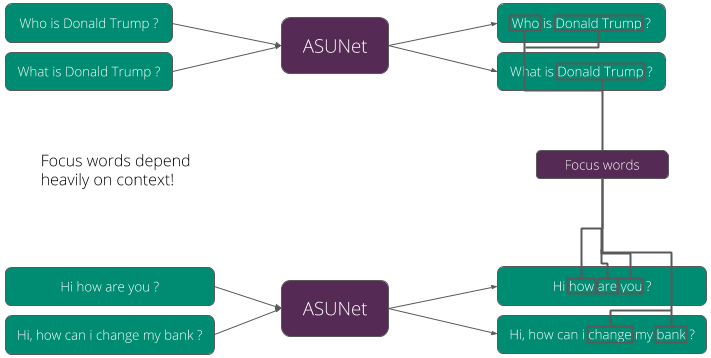

Unlike traditional topic extraction algorithms, which are keyword-based, ASUNet extracts focus words depending heavily on the context of the question.

In this example, ASUNet marks the ‘who’ in “Who is Donald Trump?” as a focus word. Conversely, in the second sentence, “What is Donald Trump?”, it recognizes that ‘what’ is not important (likely because it doesn’t make grammatical sense).

The same is true of the second example, where “how” is identified as a focus word but is ignored once the context of the question changes. Relying heavily on context enables us to extract only the most useful focus words and give them a higher priority before they are sent on to the reduction algorithm.

It should be noted that the reduction algorithm does not rely on focus words alone to compress a question into the smallest possible phrases. Rather, it combines these focus words (which are categorized by their level of importance) with any remaining words for compression and returns the best result using a voting mechanism.
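The article does not describe how the voting works, so the following is only a generic majority-vote sketch of the idea: several independent "voters" each pick their preferred candidate phrase, and the candidate with the most votes wins. The three voters, the focus-word list and the candidate phrases are all hypothetical.

```python
from collections import Counter

def majority_vote(candidates, voters):
    """Each voter picks one candidate; return the most-picked candidate."""
    ballots = [voter(candidates) for voter in voters]
    winner, _count = Counter(ballots).most_common(1)[0]
    return winner

# Hypothetical voters, each encoding one simple preference.
def shortest(candidates):
    """Prefer the candidate with the fewest words."""
    return min(candidates, key=lambda c: len(c.split()))

def most_focus_words(candidates, focus=("who", "donald", "trump")):
    """Prefer the candidate containing the most focus words."""
    return max(candidates, key=lambda c: sum(w in focus for w in c.split()))

def keeps_question_word(candidates):
    """Prefer the first candidate that starts with a question word."""
    return next((c for c in candidates if c.split()[0] in {"who", "how"}), candidates[0])

candidates = ["who donald trump", "donald trump", "who is donald trump"]
print(majority_vote(candidates, [shortest, most_focus_words, keeps_question_word]))
# who donald trump
```

Here two of the three voters agree on "who donald trump", so it beats the shorter "donald trump" even though one voter preferred brevity.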

Once the phrases are generated, they are sent back to the main algorithm (in combination with the original message) which, in turn, decides the appropriate intent. The process is similar to what is shown below:

The answer selection algorithm selects and formats the appropriate response based on the predictions from the stacked deep neural network for all phrases, including the original question:

- Intent answer - typical answer pulled from available intent

- Advanced answer - lets the user know what it does and doesn’t understand, presents the answer as a link

- No answer - avoids giving any answer at all
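One way to picture the choice between these three answer types is as a pair of confidence thresholds. This is a simplified sketch, not the actual answer selection algorithm: the `Prediction` type, the `select_answer` function, the threshold values and the example probabilities are all assumptions made for illustration.

```python
from typing import List, NamedTuple, Optional, Tuple

class Prediction(NamedTuple):
    text: str           # the original message or a reduced phrase
    intent: str         # predicted intent for that text
    probability: float  # model confidence in that intent

def select_answer(
    original: Prediction,
    phrases: List[Prediction],
    confident: float = 0.9,  # hypothetical threshold for a direct answer
    plausible: float = 0.5,  # hypothetical threshold for a hedged answer
) -> Tuple[str, Optional[str]]:
    """Pick an answer type from predictions on the message and its phrases."""
    if original.probability >= confident:
        return ("intent answer", original.intent)
    best = max(phrases, key=lambda p: p.probability, default=None)
    if best is not None and best.probability >= plausible:
        return ("advanced answer", best.intent)
    return ("no answer", None)

original = Prediction("is my travel insurance valid in spain", "Order car insurance", 0.41)
phrases = [
    Prediction("travel insurance", "Travel insurance", 0.88),
    Prediction("valid spain", "Unknown", 0.12),
]
print(select_answer(original, phrases))
# ('advanced answer', 'Travel insurance')
```

In this sketch a confident prediction on the full message yields a normal intent answer, a confident prediction on only a reduced phrase yields a hedged advanced answer, and anything weaker yields no answer.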

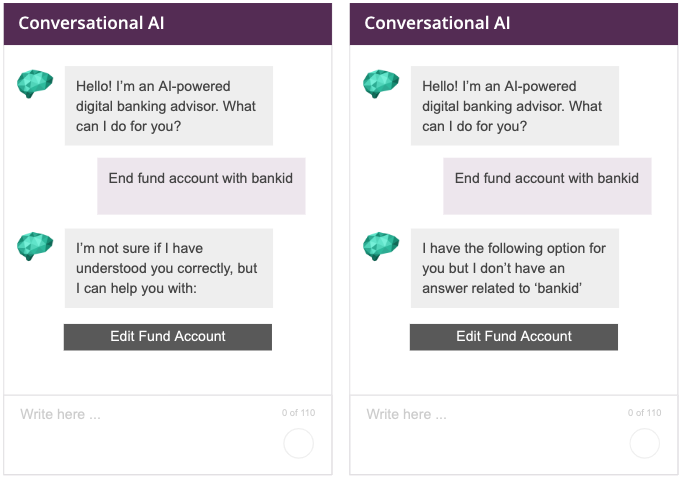

The following figure illustrates two possible advanced answers to the same question:

The response on the left shows a result where the virtual agent responds with information about what it knows. In the response on the right, the virtual agent tells the user what it doesn’t understand in the context of the question, yet still provides an answer that might be helpful.

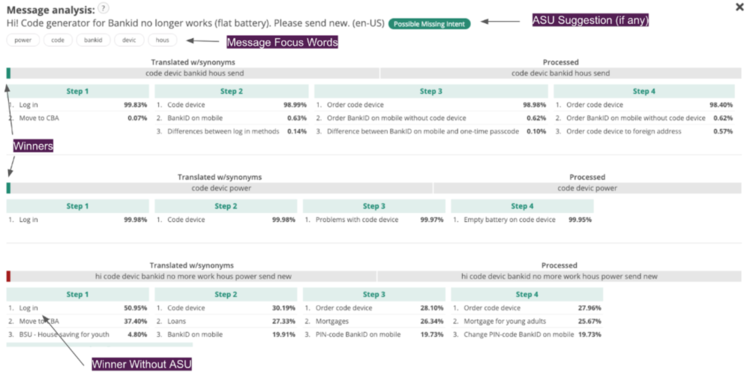

On the backend, we can see the message analysis for the same question:

We see how ASU makes a confused deep learning model more useful. The question would have produced no desirable results without ASU activated, even though the data is available and the virtual agent actually has access to the correct answer.

Putting ASU into practice

Of course, it’s all well and good if the algorithm works as described in theory, but can it actually deliver results when tested out in the real world?

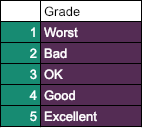

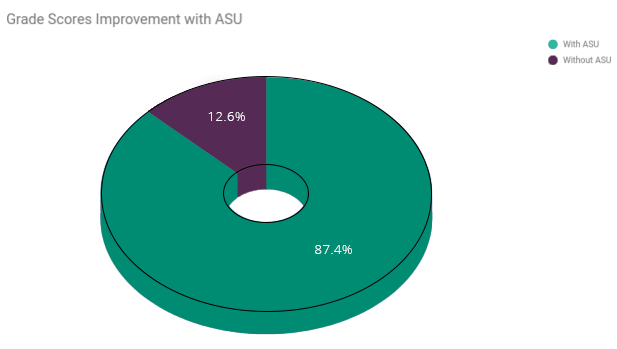

To prove that ASU goes beyond just the theoretical, we randomly selected a few thousand sample user questions and evaluated them on a scale of 1 to 5:

We then compared the results both with and without ASU.

We saw that the percentage of bad replies fell significantly, from 18.3% to 4.5%.
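For readers who want to put that drop in relative terms, the short calculation below uses only the two percentages quoted above; the variable names are ours.

```python
bad_before = 18.3  # % of bad replies without ASU (from the text)
bad_after = 4.5    # % of bad replies with ASU (from the text)

# Absolute drop in percentage points, and drop relative to the starting rate.
absolute_drop = bad_before - bad_after
relative_drop = absolute_drop / bad_before * 100

print(f"{absolute_drop:.1f} percentage points, {relative_drop:.1f}% relative reduction")
# 13.8 percentage points, 75.4% relative reduction
```

In other words, roughly three out of every four bad replies disappeared once ASU was switched on.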

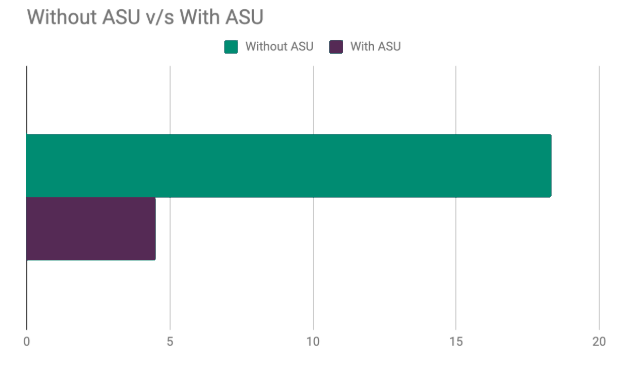

Focusing on false positives, ASU was able to reduce:

- Bad (grade = 1) false positives by 92%

- Combination of grade 1 and 2 false positives by 80%

Furthermore, in instances where the virtual agent would offer too general a response, the activation of ASU turned 52% of those cases into satisfying answers.

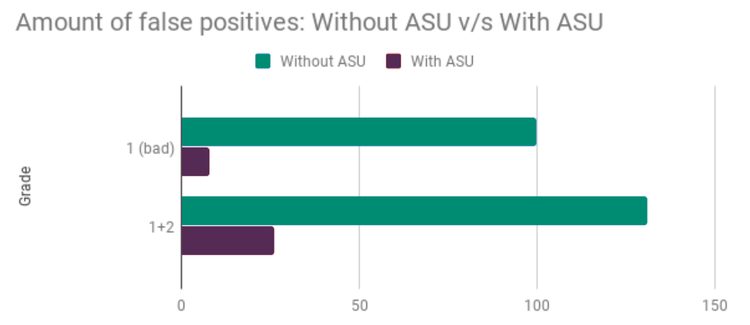

Looking at the questions that had neutral and bad scores, ASU significantly improved the user experience for many of them: 87.4% of these questions show a quantifiable improvement in their replies.

Another significant outcome is that 5.1% managed to go from the lowest score possible to the highest score possible (i.e. a perfect answer).

The core benefits of ASU

ASU helps our conversational AI platform achieve a deeper understanding of customer interactions in a number of key ways:

It can discern multiple intents in a single question

When a customer asks for several things at the same time - for instance, multiple insurance products - conversational AI can easily distinguish between multiple variables.

It is better at tackling false positives

False positives occur when a customer requests something that is not covered under any intent in the module, yet rather than reply with an ‘unknown’ statement, the virtual agent presents a response from the existing intent tree. ASU is able to identify the relevant parts of a message and offer assistance based on existing knowledge while acknowledging the gaps in its ability, minimizing the number of (and harm caused by) these cases.

It understands complex queries

ASU increases conversational AI’s ability to better understand customers with low internet experience, elevating a typical user experience from good to great.

It’s harder to ‘trip up’

The way in which ASU handles inquiries helps to reduce the chances that users find replies that could be used for criticism, negatively affecting a brand.